Inferencing on a Raspberry Pi

Hardware Requirements

This section requires a Romi or a Raspberry Pi that is running the WPILibPi Image.

Instructions for setting up WPILibPi can be found here.

This section also uses the following hardware:

A USB camera

A Coral Edge TPU (highly recommended)

Note

A Coral Edge TPU is a USB accelerator that the Raspberry Pi uses as a co-processor to speed up inferencing. In our experience, it improves performance by around 9 times.

Uploading the Model to the Pi

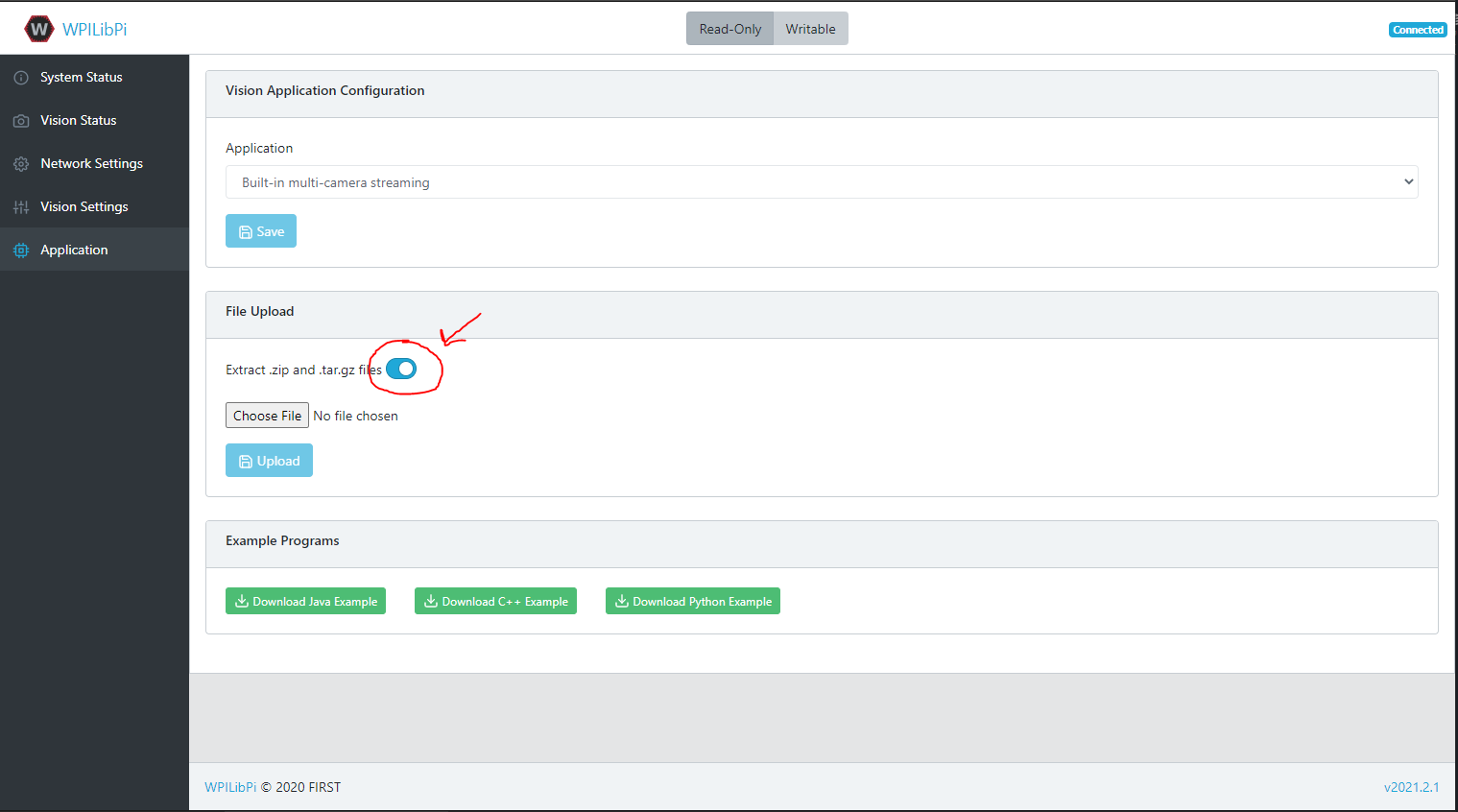

To upload your tflite model to the Raspberry Pi, connect to the Pi and open its web interface. Select the Application tab, go to the File Upload section, and turn the Extract slider on.

Once the slider is on, you can upload the zip file that you downloaded from Axon to the Pi.
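Before uploading, it can help to confirm that the zip file actually contains a tflite model. The following is a minimal sketch using only the Python standard library. The helper name `find_tflite_members` and the member names in the demo archive are hypothetical placeholders, not a description of what Axon exports.

```python
import io
import zipfile


def find_tflite_members(zip_source):
    """Return the names of all .tflite files inside a zip archive.

    zip_source may be a filesystem path or a binary file-like object.
    (Hypothetical helper for illustration only.)
    """
    with zipfile.ZipFile(zip_source) as archive:
        return [name for name in archive.namelist()
                if name.lower().endswith(".tflite")]


if __name__ == "__main__":
    # Build a small in-memory archive so the sketch runs without a real export.
    # These member names are placeholders, not the actual Axon layout.
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("model.tflite", b"placeholder")
        archive.writestr("labels.txt", "placeholder")
    buffer.seek(0)
    print(find_tflite_members(buffer))
```

To check a real download, pass the path of the zip file to `find_tflite_members` instead of the in-memory buffer. An empty list suggests the wrong file was downloaded.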

Inferencing

Once the model has been uploaded, the Pi needs Python code to use it. The provided inference script is available from the releases page: GitHub

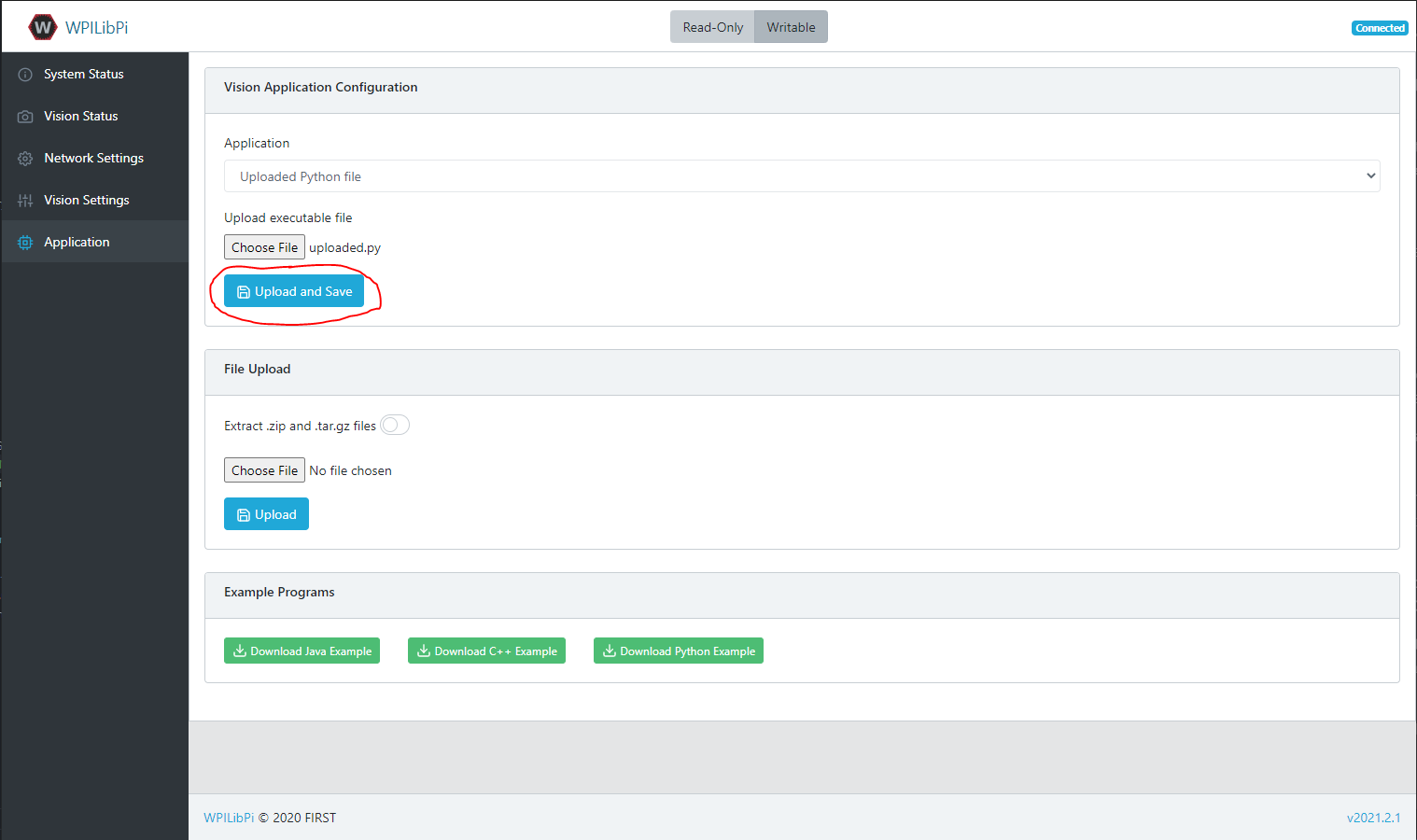

Once the script is downloaded, use the Vision Application Configuration section to upload the Python file.

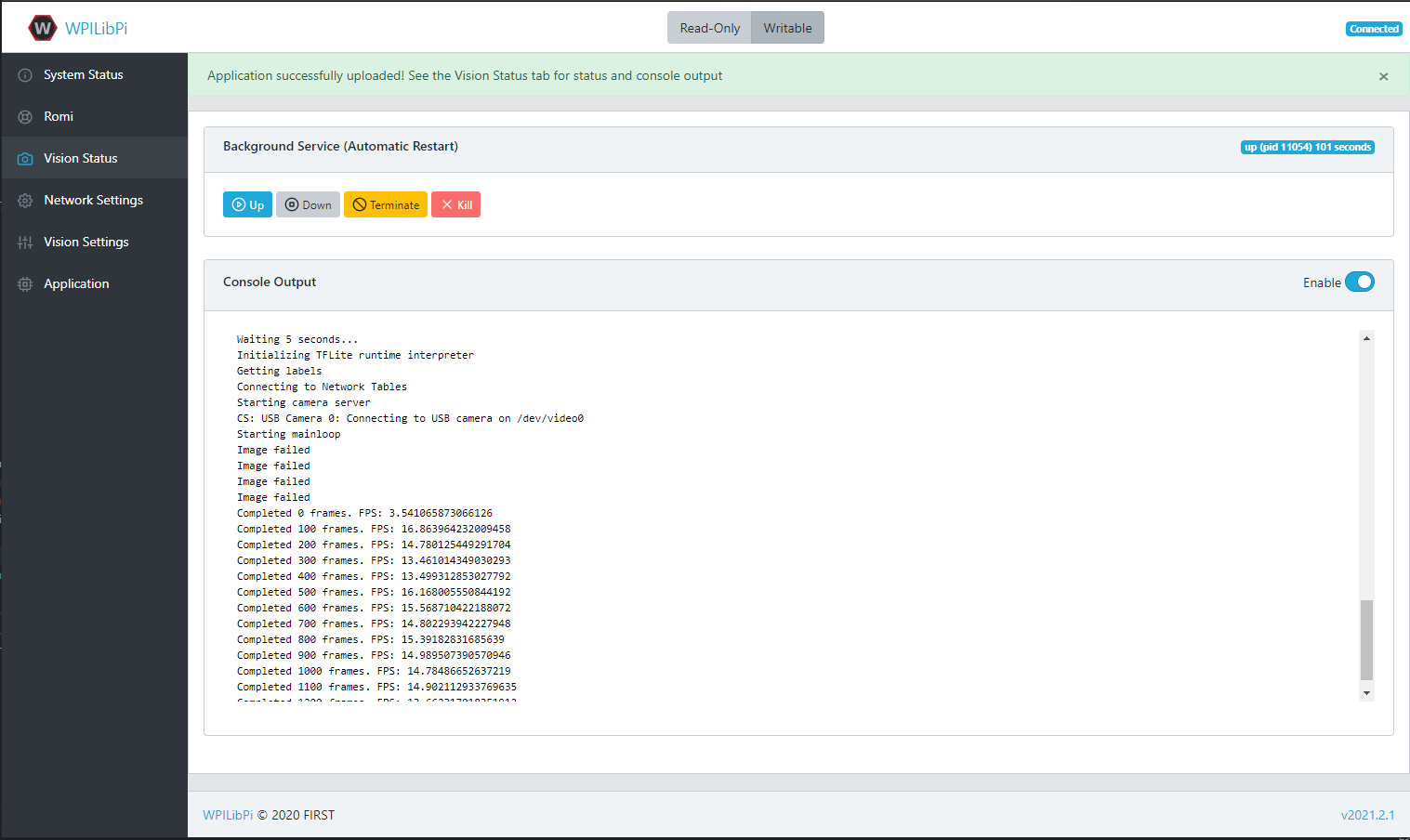

Once the script is uploaded, use the Vision Status tab to check its state. You should see updates that report a framerate, which indicates that everything is working.

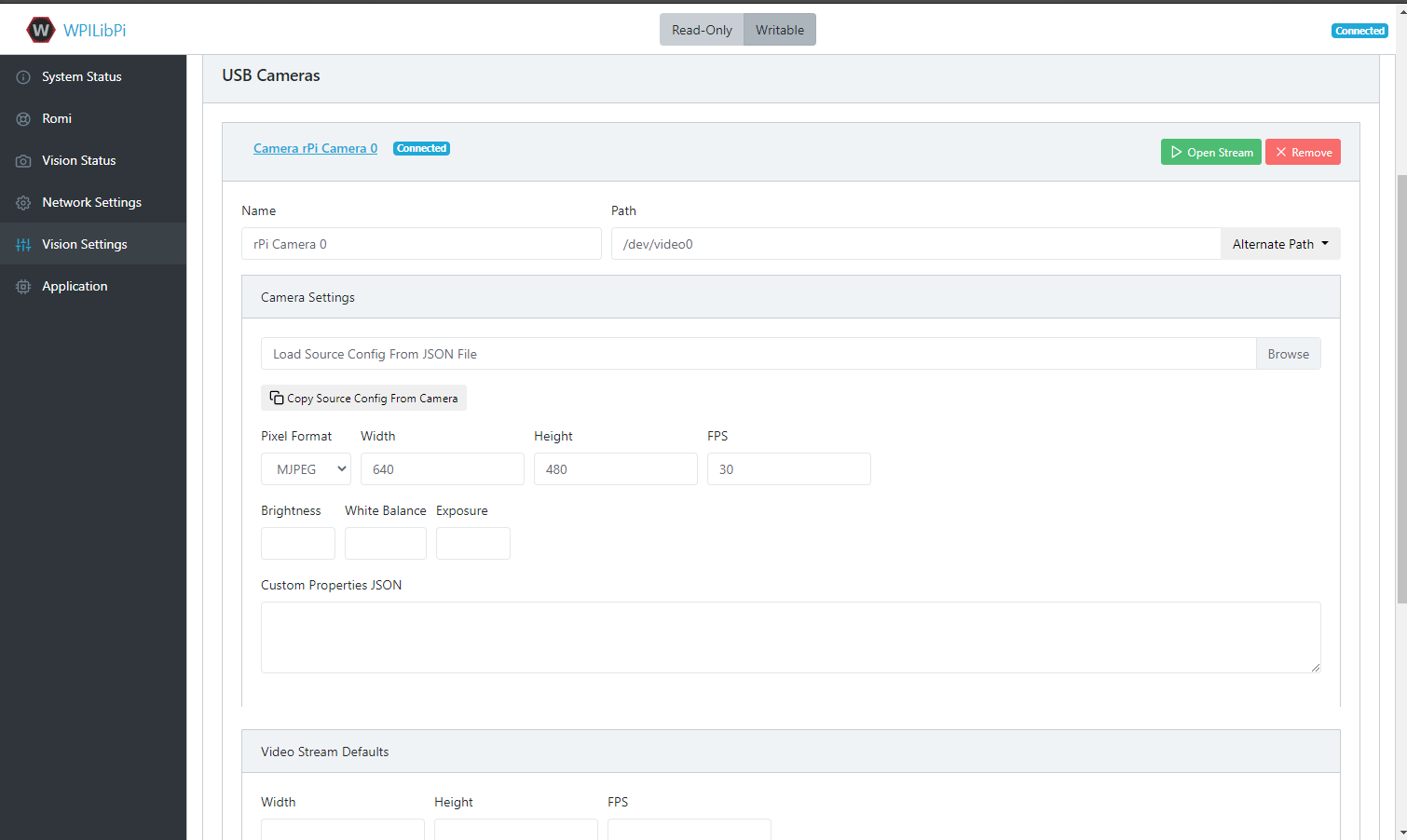

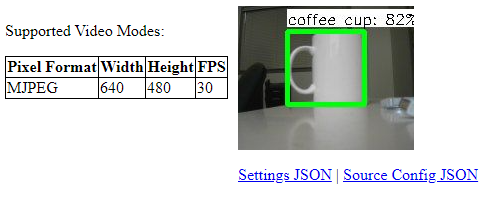

Head to the Vision Settings tab. First, click on the camera stream and change the resolution to be greater than 300 by 300 pixels (e.g., 640 by 480).

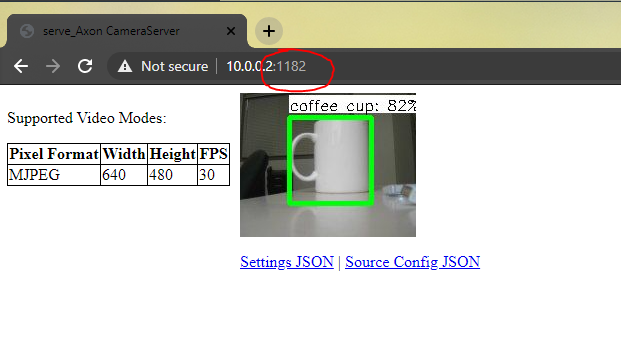

Then click on Open Stream to view the camera stream. Increase the port number at the top by one each time to move through the various camera streams until you arrive at the desired one. The default stream is always on port 1181, and additional streams start at 1182.

You can then see the output stream from the machine learning model. You should be able to see the Raspberry Pi identifying objects!
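The port arithmetic described above can be sketched as a small helper. This is only an illustration: the function names are hypothetical, and the host name `wpilibpi.local` is an assumption about how the Pi is reachable on your network, so substitute its actual address.

```python
BASE_STREAM_PORT = 1181  # port of the default stream, as stated in the text


def stream_port(index):
    """Port for the stream at zero-based position `index` (hypothetical helper)."""
    if index < 0:
        raise ValueError("index must be non-negative")
    return BASE_STREAM_PORT + index


def stream_url(host, index):
    """Base URL for the stream at `index` on `host` (hypothetical helper)."""
    return f"http://{host}:{stream_port(index)}/"


if __name__ == "__main__":
    # "wpilibpi.local" is an assumed host name; replace it with your Pi's address.
    for i in range(3):
        print(stream_url("wpilibpi.local", i))
```

Index 0 corresponds to the default stream, and each additional stream is one port higher, which matches stepping the port number up by one in the browser.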

NetworkTables

Note

When using a Romi, robot code must be running in the simulator to receive NetworkTables data.

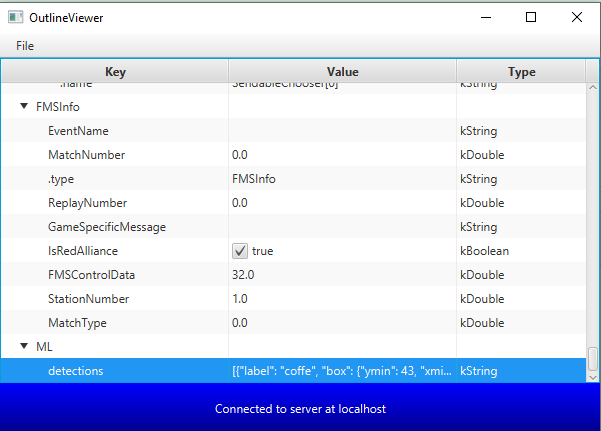

The Python script from the releases page that performs the inferencing also pushes its results to NetworkTables. To see the results, you can use the OutlineViewer tool.

Note

Use the Server Location localhost when running on a Romi.
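Once the inference results are visible, robot code will typically want to pick one detection to act on. The sketch below assumes, purely for illustration, that the results arrive as a JSON string holding a list of objects with `label` and `confidence` fields. That layout and the helper name `best_detection` are assumptions, so check the actual entries in OutlineViewer before relying on them.

```python
import json


def best_detection(detections_json, min_confidence=0.5):
    """Return the highest-confidence detection at or above min_confidence.

    Returns None when no detection qualifies. The JSON layout (a list of
    objects with a "confidence" field) is an assumption for illustration.
    """
    detections = json.loads(detections_json)
    candidates = [d for d in detections
                  if d.get("confidence", 0.0) >= min_confidence]
    if not candidates:
        return None
    return max(candidates, key=lambda d: d["confidence"])


if __name__ == "__main__":
    # Hypothetical sample data, not output captured from the real script.
    sample = json.dumps([
        {"label": "ball", "confidence": 0.42},
        {"label": "ball", "confidence": 0.91},
    ])
    print(best_detection(sample))
```

Filtering by a minimum confidence before choosing the maximum keeps a weak, spurious detection from being acted on when nothing is clearly in view.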